Module 1: GPU Hardware Basics

1.1 GPU Fundamentals

• GPU vs. CPU: Architectural Comparison and Application Scenarios

• Overview of NVIDIA, AMD, Intel GPUs (Datacenter vs. Consumer Grade)

• Major GPU Computing Platforms (CUDA, ROCm, OpenCL)

1.2 GPU Specifications and Selection

• Introduction to Tensor Cores, RT Cores, CUDA Cores

• VRAM Capacity and Bandwidth (HBM vs. GDDR)

• Impact of PCIe Generation and Lane Count (Gen4 vs. Gen5) on Performance

• Multi-GPU Interconnect Technologies (NVLink vs. PCIe)

• GPU Selection Guide for AI / HPC Workloads (A100, H100, RTX 4090, MI250X)
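
As a rough aid for the PCIe topic above, the sketch below computes theoretical one-direction link bandwidth from the per-lane signalling rate and the 128b/130b line encoding used by Gen3 through Gen5. The function name `pcie_bandwidth_gb_s` is illustrative, and the result ignores packet and protocol overhead, so measured throughput will be lower.

```python
# Per-lane raw signalling rates in GT/s for each PCIe generation.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen3 through Gen5 use 128b/130b encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    if gen not in PCIE_GT_PER_S:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes not in (1, 2, 4, 8, 16):
        raise ValueError(f"unsupported lane count: {lanes}")
    gbit_per_s = PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes
    return gbit_per_s / 8  # convert bits to bytes


if __name__ == "__main__":
    for gen, lanes in [(4, 16), (5, 16), (5, 8)]:
        bw = pcie_bandwidth_gb_s(gen, lanes)
        print(f"Gen{gen} x{lanes}: {bw:.1f} GB/s per direction")
```

A Gen5 x8 link comes out equal to a Gen4 x16 link, which is why both the generation and the lane count matter when a motherboard splits lanes between several slots.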

1.3 GPU Server Hardware

• Comparison: Server vs. Workstation vs. Laptop GPU Deployment

• GPU Power Requirements (TDP, 8-Pin / 16-Pin Power Connectors)

• Cooling Solutions (Air Cooling vs. Liquid (Water) Cooling)

• Rack Installation and GPU Server Environment Requirements

Module 2: GPU Assembly

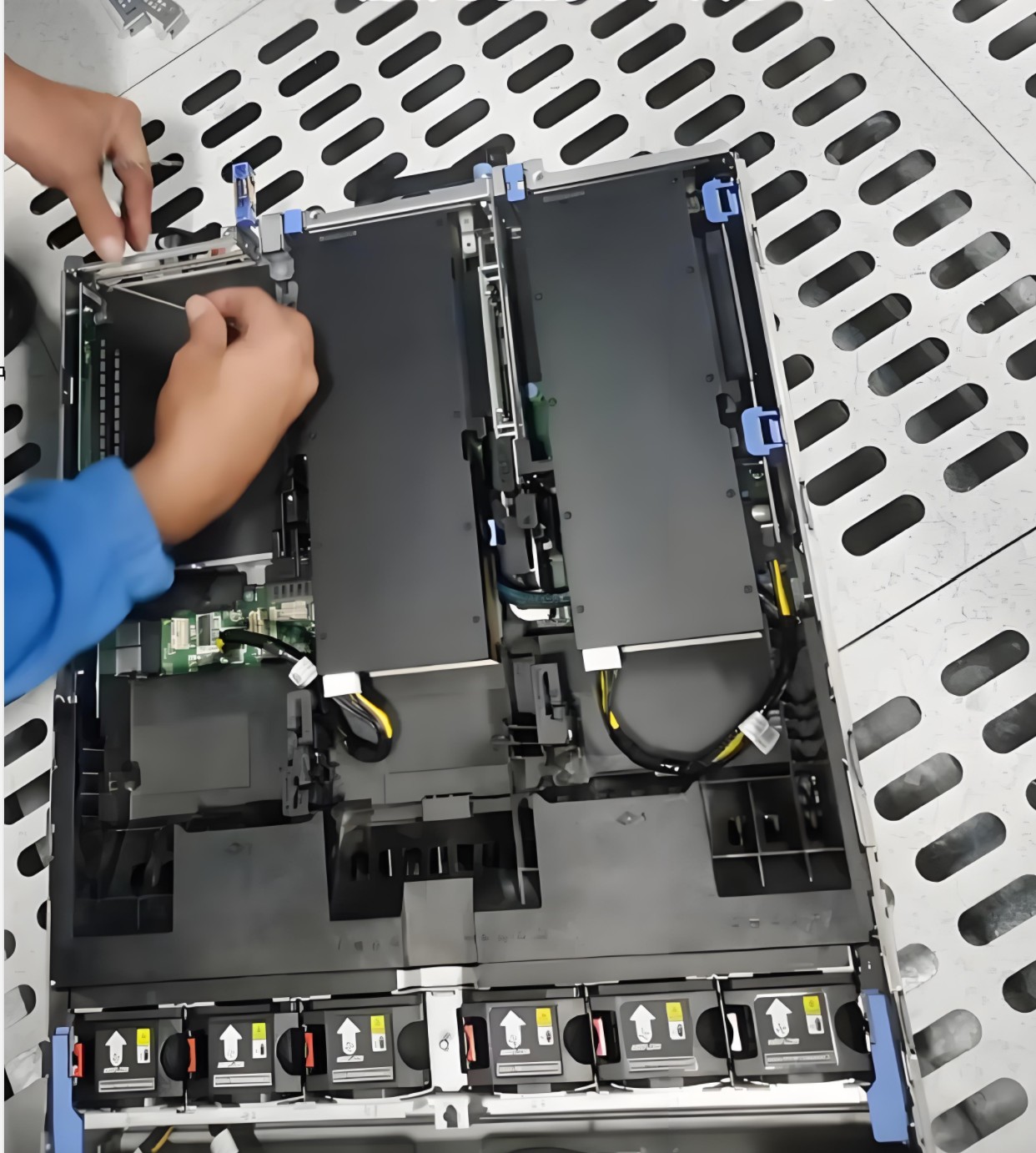

2.1 Physical GPU Installation

• Selecting a Compatible Motherboard (PCIe x16 Support, Multi-GPU Slots)

• Installing GPUs in a Server or Workstation

• Multi-GPU Setup (SLI / NVLink Bridge)

• Correctly Connecting Power Cables (ATX / Server PSU)

• Optimizing Case Cooling (Airflow Design)

2.2 BIOS / Firmware Configuration

• Checking BIOS Settings (PCIe Lanes, Resizable BAR)

• BIOS Updates and GPU Compatibility Check

• IPMI Remote Management for GPU Servers (Data Center Use)

Module 3: GPU Drivers and Software Environment

3.1 GPU Driver Installation

• Installing NVIDIA / AMD / Intel Drivers (Windows & Linux)

• Using CUDA / ROCm for GPU Computing

• Driver Version Management (NVIDIA Driver, CUDA Toolkit)

• Checking GPU Recognition (nvidia-smi, rocminfo)

3.2 GPU Computing Frameworks

• Deep Learning Frameworks (TensorFlow, PyTorch, JAX)

• GPU Acceleration Libraries (cuDNN, cuBLAS, TensorRT)

• GPU Job Schedulers (SLURM, Kubernetes + GPU)

Module 4: GPU Performance Optimization

4.1 GPU Performance Testing

• Monitoring GPU Status (Temperature, Power Consumption, VRAM Usage)

• Using nvidia-smi and gpustat to Monitor GPUs

• Benchmark Testing (CUDA-Bench, Geekbench)
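
One possible way to script the monitoring topics above is to call `nvidia-smi` with its `--query-gpu` option and parse the CSV output. This is a minimal sketch: the helper names `parse_gpu_csv` and `query_gpus` are illustrative, values are kept as strings because some fields can report `[N/A]`, and the simple comma split assumes GPU names contain no commas.

```python
import shutil
import subprocess

# Field names accepted by `nvidia-smi --query-gpu` (see `nvidia-smi --help-query-gpu`).
FIELDS = ["index", "name", "temperature.gpu", "power.draw", "memory.used", "memory.total"]


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(FIELDS):
            continue  # skip lines that do not match the requested fields
        rows.append(dict(zip(FIELDS, values)))
    return rows


def query_gpus() -> list[dict]:
    """Run nvidia-smi if available; return an empty list if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = [
        "nvidia-smi",
        f"--query-gpu={','.join(FIELDS)}",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` in a loop with a short sleep gives a basic logger for temperature (°C), power draw (W), and VRAM usage (MiB); `gpustat` offers a similar view interactively.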

4.2 Overclocking and Power Management

• GPU Overclocking Tools (Precision X1, MSI Afterburner)

• Power Limit Management (nvidia-smi -pl to Set Power Cap)

• Cooling Comparison: Air vs. Water Cooling

4.3 Multi-GPU Parallel Computing Optimization

• Data Parallelism vs. Model Parallelism

• NCCL Library for Optimizing Multi-GPU Communication

• Load Balancing and Dynamic Scheduling Strategies
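
To make the data-parallelism topic above concrete, here is a toy sketch in plain Python with no GPU required. Each simulated worker computes a gradient on its own shard of the batch, and an averaging step stands in for the all-reduce that a library such as NCCL would perform. The model (`y = w * x`), the function names, and the numbers are all illustrative assumptions.

```python
def gradient(w, xs, ys):
    """Gradient of mean squared error for the model y = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def shard(items, num_workers):
    """Split a batch into equal contiguous shards, one per simulated GPU."""
    if len(items) % num_workers != 0:
        raise ValueError("batch size must be divisible by the worker count")
    size = len(items) // num_workers
    return [items[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker receives the same average."""
    return sum(values) / len(values)


def data_parallel_step(w, xs, ys, num_workers, lr):
    """One SGD step: local gradient per shard, averaged, then a shared update."""
    x_shards = shard(xs, num_workers)
    y_shards = shard(ys, num_workers)
    local_grads = [gradient(w, sx, sy) for sx, sy in zip(x_shards, y_shards)]
    return w - lr * all_reduce_mean(local_grads)


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
    print(data_parallel_step(0.0, xs, ys, num_workers=2, lr=0.01))
```

With equal shard sizes the averaged gradient matches the full-batch gradient, so every replica applies the same update. Model parallelism differs in that the parameters themselves, not the batch, are split across devices.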

Module 5: GPU Fault Diagnosis and Maintenance

5.1 Common GPU Issues

• Crashes Due to VRAM Shortage (OOM Error)

• GPU Load Imbalance (Bottleneck Analysis)

• Thermal Throttling Due to Overheating
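
For the OOM topic above, a quick back-of-the-envelope check can flag models that cannot fit before a job is launched. The sketch below estimates the memory taken by model weights alone; it deliberately ignores activations, optimizer state, and framework overhead, and the 20% `headroom` default is an assumed placeholder, not a recommended value.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gib(num_params: int, dtype: str) -> float:
    """Memory for the model weights only, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def fits_in_vram(num_params: int, dtype: str, vram_gib: float, headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving a fraction of VRAM free for everything else."""
    return weights_gib(num_params, dtype) <= vram_gib * (1 - headroom)


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for dtype in ("fp32", "fp16", "int8"):
        size = weights_gib(params, dtype)
        ok = fits_in_vram(params, dtype, vram_gib=24)
        print(f"{dtype}: {size:.2f} GiB, fits in 24 GiB: {ok}")
```

Training typically needs several times the weight footprint, so a model that passes this check for inference can still run out of memory during training.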

5.2 Troubleshooting Tools

• Using dmesg / journalctl to Check Driver Error Logs

• Generating Diagnostic Reports with nvidia-bug-report.sh

• GPU VRAM Testing Tools (MemtestG80 / CUDA Memtest)
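
When reviewing `dmesg` or `journalctl` output for NVIDIA driver problems, the lines of interest are usually the `NVRM: Xid` messages. The sketch below filters saved log text for them and extracts the device and Xid code. The function name `find_xid_events` is illustrative, and the regular expression assumes the common `NVRM: Xid (PCI:...): <code>, ...` layout, which may vary between driver versions.

```python
import re

# Matches e.g. "NVRM: Xid (PCI:0000:3b:00): 79, ..." and captures device and code.
XID_PATTERN = re.compile(r"NVRM: Xid \((?P<device>[^)]+)\): (?P<code>\d+)")


def find_xid_events(log_text: str) -> list[tuple[str, int, str]]:
    """Return (device, xid_code, full_line) for every Xid message in the log text."""
    events = []
    for line in log_text.splitlines():
        match = XID_PATTERN.search(line)
        if match:
            events.append((match.group("device"), int(match.group("code")), line.strip()))
    return events
```

One way to use it is to save the kernel log to a file (for example with `dmesg > kernel.log`), read the file in Python, and pass its contents to `find_xid_events`. The meaning of each Xid code is listed in NVIDIA's Xid documentation.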

5.3 Server-Level GPU Maintenance

• Regular Dust Cleaning and Thermal Paste Replacement

• Power Supply and Power Stability Checks

• NVLink Connection Inspection

Module 6: GPU Server Cluster Setup

6.1 Distributed GPU Computing

• Multi-Node Multi-GPU Configuration (RDMA, InfiniBand)

• Kubernetes GPU Container Management (Docker + GPU)

• Training Large Models with Horovod and DeepSpeed

6.2 Cloud GPU Computing

• Using GPU Instances on AWS / Azure / Google Cloud

• Cloud GPU Cost Optimization (Spot Instances vs. On-Demand)

• Cloud vs. On-Premises GPU Cost Analysis
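
The cloud versus on-premises comparison above often reduces to a break-even calculation: how many hours of use it takes before owning hardware becomes cheaper than renting it. The sketch below shows that arithmetic under simplified assumptions (a single up-front purchase cost and flat hourly rates). All figures in the example are hypothetical placeholders, not real prices.

```python
from typing import Optional


def break_even_hours(purchase_cost: float, on_prem_hourly: float, cloud_hourly: float) -> Optional[float]:
    """Hours of use after which owning becomes cheaper than renting.

    purchase_cost:  up-front hardware cost
    on_prem_hourly: running cost per hour when owning (power, cooling, hosting)
    cloud_hourly:   rental price per hour for a comparable instance
    """
    saving_per_hour = cloud_hourly - on_prem_hourly
    if saving_per_hour <= 0:
        return None  # renting is never more expensive per hour, so no break-even
    return purchase_cost / saving_per_hour


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    hours = break_even_hours(purchase_cost=30_000, on_prem_hourly=0.5, cloud_hourly=3.0)
    print(f"Break-even after {hours:.0f} hours of use")
```

Running the same function with a spot-instance rate and an on-demand rate shows how strongly the break-even point depends on the pricing model and on expected utilization.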

GPU Assembly and Debugging